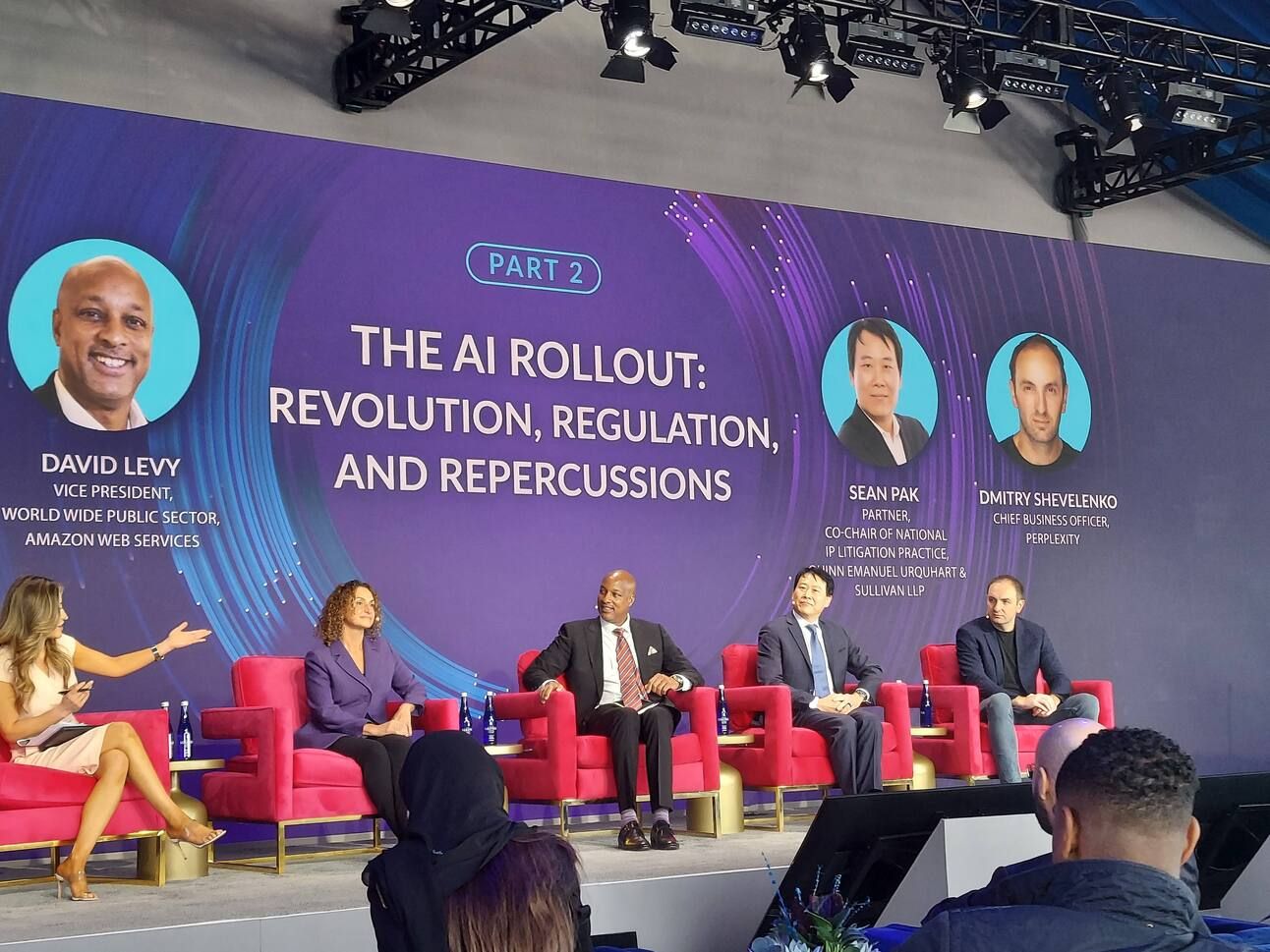

The AI Rollout: Navigating Innovation, Regulation, and Responsibility in a Rapidly Evolving World

As artificial intelligence (AI) reshapes every aspect of modern life—from education and healthcare to law and national security—governments, technologists, and the public are increasingly faced with critical questions: How do we foster innovation while safeguarding society? Who holds the reins when powerful AI tools can both empower and disrupt? And how can we ensure transparency, accountability, and ethical use across diverse industries?

These are the urgent issues explored at “The AI Rollout,” a timely and thought-provoking panel discussion held on May 5, 2025. Featuring leading figures from DeepMind, AWS, Apple, and Perplexity, alongside legal experts specializing in intellectual property and AI regulation, the event brought together public and private sector voices for a conversation that underscored both the promise and the peril of AI.

Below, we’ll break down the key takeaways from the panel and reflect on what they mean for the road ahead.

1. We’re Living Through a Historic Technological Shift

The session began with a recognition of the moment we’re in: a pivotal juncture in the technological evolution of humanity. AI is not simply another tech wave—it’s a revolutionary transformation with far-reaching implications for society, governance, and human interaction.

Lila Ibrahim, COO at DeepMind and one of the top 100 minds in AI in 2023, noted that DeepMind was founded in 2010 with a mission to “build AI responsibly to benefit humanity.” Responsibility, she emphasized, was not an afterthought but a foundational principle. Governments have increasingly turned to firms like DeepMind for guidance on how to make this powerful technology safe.

This marks a shift: AI is no longer just the domain of engineers or entrepreneurs; it’s a matter of public policy, ethics, and national interest.

2. Government and Private Sector Must Collaborate on AI Safety

A recurring theme throughout the panel was the crucial importance of collaboration between public institutions and private innovators. While governments must establish safeguards and accountability frameworks, much of the knowledge and innovation originates from private labs and startups.

As Dimitri Shabanko from Perplexity AI pointed out, “We’re in an era where the models being created are not only more powerful but also more accessible.” This accessibility heightens the urgency for joint oversight. Perplexity, now one of the fastest-growing AI startups, exemplifies how quickly new players can scale—and why agility in regulation is necessary.

Emmanuel Dave Levy, who has led public-sector initiatives at AWS and Apple, argued that adoption and safety are two sides of the same coin. “We can’t afford to let innovation stall, but we also can’t turn a blind eye to the real consequences of misuse,” he said. His vantage point, bridging tech giants and government, gave his remarks particular weight.

3. Transparency and Trust Are the Cornerstones of Progress

One challenge facing the AI industry is a pervasive lack of public trust. Black-box systems, deepfakes, and opaque data practices have all contributed to widespread skepticism. To earn public confidence, panelists agreed, AI companies must prioritize transparency and open communication.

This doesn’t just mean releasing whitepapers—it means explaining how models work, what data they are trained on, and what risks they carry. As Lila Ibrahim stated, “We must put in the work to earn society’s trust, especially as the capabilities of these systems increase.”

Sean Pack, a leading legal voice in intellectual property and AI governance, reinforced this point: “If we don’t have transparency, we won’t have legitimacy.” Legal frameworks must evolve in lockstep with technological capabilities to ensure enforceability, especially as generative AI produces vast amounts of synthetic content.

4. AI Regulation Needs to Be Agile, Not Reactive

While many countries are moving forward with national AI strategies and draft regulations, the panel warned that overly rigid or outdated policies could stifle innovation or push companies offshore. What’s needed, they argued, is agile regulation—frameworks that can adapt to rapid advancements without compromising oversight.

One idea floated was a “regulatory sandbox” in which companies could test new models under controlled conditions with oversight from regulators. This would allow innovation to continue while still surfacing potential harms before they reach scale.

There was also discussion around international collaboration. With AI systems increasingly borderless, unilateral regulation is often ineffective. Panelists called for global standards and interoperability—a sort of “AI Geneva Convention” to ensure consistent rules on ethics, safety, and fair use.

5. The Future of Work Hinges on Proactive Training and Inclusion

Beyond safety and innovation, the rollout of AI has profound implications for employment, education, and inclusion. While AI has the potential to boost productivity and unlock new industries, it also risks displacing millions of workers if governments and institutions don’t act now.

Speaker after speaker stressed the importance of workforce reskilling, especially for mid-career professionals and marginalized communities. “If we don’t bring everyone along, the digital divide will become a chasm,” said Levy.

Panelists discussed a variety of strategies, including:

- Public-private partnerships to deliver AI training

- Apprenticeship programs for high school and community college students

- Inclusion targets for historically underrepresented groups in tech

- Support for small businesses to integrate AI responsibly

This isn’t just about economic inclusion—it’s also about building trust. A society that benefits broadly from AI is more likely to support its continued development.

6. Legal Challenges Are Emerging—and Evolving

From copyright infringement to biometric privacy, the legal questions surrounding AI are far from settled. Sean Pack offered a sobering reminder that the law is still playing catch-up. “In the absence of legal precedent, we’re relying heavily on interpretation,” he said.

Two pressing legal issues stood out:

- Intellectual Property: Who owns content generated by AI? If a model is trained on copyrighted materials, does it infringe upon those rights? The answers are still murky and vary across jurisdictions.

- Data Privacy and Consent: AI systems trained on user data raise critical consent issues. Europe’s GDPR has set a high bar, but other nations have yet to implement similarly strict standards.

The legal system’s slow pace creates a vacuum that must be filled by ethical frameworks and corporate responsibility. But as AI becomes further embedded in daily life, court decisions and legislation will eventually catch up—and reshape the industry.

7. Sustainability Must Be Part of the AI Conversation

One of the lesser-discussed but vital themes was environmental sustainability. Training and deploying large-scale AI models consumes significant energy. As the climate crisis accelerates, the industry must reckon with its carbon footprint.

Some suggestions raised:

- Optimizing data center efficiency

- Using renewable energy sources

- Building smaller, more efficient models

- Disclosing the energy usage of training processes

The panel agreed that sustainability cannot be an afterthought. “AI should be a force multiplier for sustainability—not an additional burden,” said Ibrahim.

8. We Need Ethical Guardrails—Not Just Technical Benchmarks

The discussion closed on a philosophical but urgent note: AI must be developed with an ethical north star. It’s not enough to simply make systems smarter, faster, or more accurate—we must also make them fair, inclusive, and aligned with human values.

This includes:

- Addressing bias in training data

- Designing models to prevent harmful outputs

- Ensuring AI tools are accessible to people with disabilities

- Preventing the use of AI for surveillance or manipulation

Panelists agreed that these questions won’t be solved by engineers alone. They require input from ethicists, social scientists, artists, and everyday users. “AI doesn’t just reflect our values—it shapes them,” said one speaker. “So we have a responsibility to get it right.”

Conclusion: The Road Ahead

“The AI Rollout” panel made one thing clear: the deployment of AI is not just a technical challenge but a societal reckoning. We are being asked, collectively, to decide how this technology will shape our world. Will it empower or exclude? Safeguard or surveil? Transform or disrupt?

We need bold leadership, transparent dialogue, and agile regulation. But above all, we need a shared vision rooted in humanity, equity, and justice.

As AI continues to evolve, so too must our frameworks for trust, accountability, and responsibility. The rollout is already happening. Now it’s up to us to shape what comes next.
